Happy Holidays!

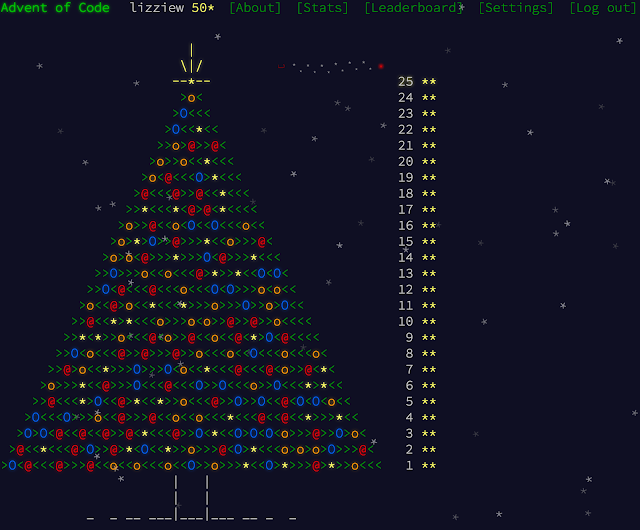

Just got back home from my first term at college! This fall, I took 6.01 (Introduction to EECS), 6.042 (Math for Computer Science), 8.02 (Physics II), and 21W.747 (Rhetoric). (Unfortunately, 8.02 met 9-11 am three days a week, which required a lot of coffee for me...)

In addition to continuing squash, I joined a couple of clubs: SBC (Sloan Business Club, mitsbc.mit.edu) and Code for Good (codeforgood.mit.edu). They're both a fun way to learn and volunteer, and to meet some pretty inspiring upperclassmen!

Finally, I just started coding solutions to the puzzles on adventofcode.com yesterday, and I'm on day 16 now. The advent calendar is currently on day 21, so I have a lot of catching up to do! I'm pushing my code to GitHub here: https://github.com/lizziew/playground/tree/master/AdventOfCode

I'll be back at MIT pretty soon (Jan 4), but in the meantime, Happy Holidays (and watch the new Star Wars movie)!

Update: Merry Xmas! Advent of Code is officially over!